In the previous post about Web Scraping with Python we talked a bit about Scrapy. In this post we are going to dig a little bit deeper into it.

Scrapy is a wonderful open source Python web scraping framework. It handles the most common use cases when doing web scraping at scale:

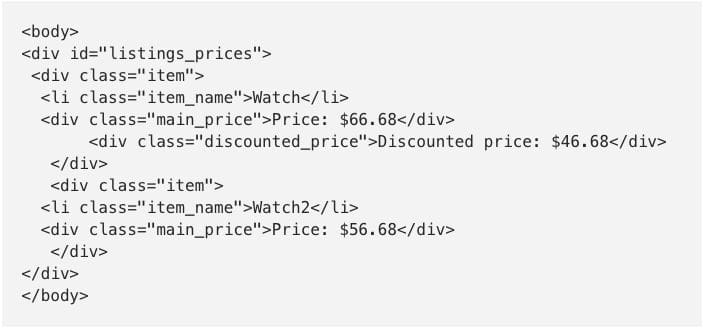

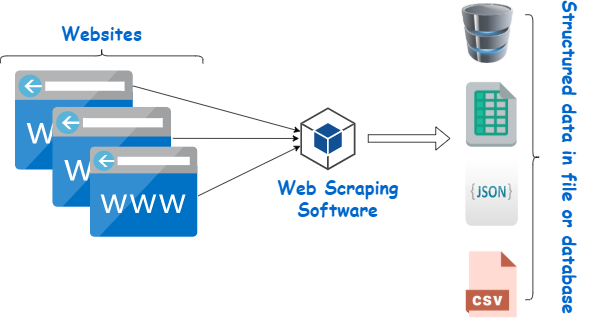

Web scraping (also termed web data extraction, screen scraping, or web harvesting) is a technique of extracting data from the websites. It turns unstructured data into structured data that can be stored into your local computer or a database. It can be difficult to build a web scraper for people who don’t know anything about coding.

- Concurrency (requests are handled asynchronously)

- Crawling (going from link to link)

- Extracting the data

- Validating

- Saving to different format / databases

- Many more

The main difference between Scrapy and other commonly used libraries like Requests / BeautifulSoup is that it is opinionated. It allows you to solve the usual web scraping problems in an elegant way.

The downside of Scrapy is that the learning curve is steep and there is a lot to learn, but that is what we are here for :)

In this tutorial we will create two different web scrapers, a simple one that will extract data from an E-commerce product page, and a more “complex” one that will scrape an entire E-commerce catalog!

Basic overview

You can install Scrapy using pip. Be careful though: the Scrapy documentation strongly recommends installing it in a dedicated virtual environment in order to avoid conflicts with your system packages.

I'm using Virtualenv and Virtualenvwrapper to create that environment, and then installing Scrapy into it with pip.

You can now create a new Scrapy project with the scrapy startproject command, followed by the name of your project.

This will create all the necessary boilerplate files for the project.

Here is a brief overview of these files and folders:

- items.py is a model for the extracted data. You can define a custom model (like a Product) that will inherit from the Scrapy Item class.

- middlewares.py contains middlewares used to change the request / response lifecycle. For example, you could create a middleware to rotate user-agents, or to use an API like ScrapingBee instead of doing the requests yourself.

- pipelines.py contains item pipelines. In Scrapy, pipelines are used to process the extracted data: clean the HTML, validate the data, and export it to a custom format or save it to a database.

- /spiders is a folder containing Spider classes. With Scrapy, Spiders are classes that define how a website should be scraped, including which links to follow and how to extract the data from those links.

- scrapy.cfg is a configuration file to change some settings.
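To make the middlewares.py entry more concrete, here is a minimal sketch of a downloader middleware that rotates user-agents. The class name RotateUserAgentMiddleware and the USER_AGENTS list are hypothetical, and the middleware would still have to be enabled through the DOWNLOADER_MIDDLEWARES setting in settings.py.

```python
import random

# Hypothetical pool of user-agent strings; replace them with real ones.
USER_AGENTS = [
    "Mozilla/5.0 (X11; Linux x86_64) ExampleBrowser/1.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15) ExampleBrowser/2.0",
]


class RotateUserAgentMiddleware:
    """Downloader middleware that picks a random user-agent per request."""

    def process_request(self, request, spider):
        # Scrapy calls this hook for every outgoing request.
        request.headers["User-Agent"] = random.choice(USER_AGENTS)
        # Returning None tells Scrapy to keep processing the request normally.
        return None
```

Because the class only relies on the process_request hook, it does not need to inherit from any Scrapy base class.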

Scraping a single product

In this example we are going to scrape a single product from a dummy E-commerce website. Here is the first product we are going to scrape:

https://clever-lichterman-044f16.netlify.com/products/taba-cream.1/

We are going to extract the product name, picture, price and description.

Scrapy Shell

Scrapy comes with a built-in shell that helps you try and debug your scraping code in real time. You can quickly test your XPath expressions / CSS selectors with it. It's a very cool tool to write your web scrapers and I always use it!

You can configure Scrapy Shell to use another console instead of the default Python console like IPython. You will get autocompletion and other nice perks like colorized output.

In order to use it in your Scrapy shell, you need to add the line shell = ipython to the [settings] section of your scrapy.cfg file.

Once it's configured, you can start the shell by running scrapy shell from the command line.

We can then fetch a URL by simply calling fetch() with the URL of the product page.

This will start by fetching the /robots.txt file.

In this case there isn't any robots.txt, which is why we can see a 404 HTTP code. If there were a robots.txt, Scrapy would follow its rules by default.

You can disable this behavior by changing this setting in settings.py:
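The setting in question is ROBOTSTXT_OBEY. A minimal sketch of the change, assuming the settings.py generated with the project:

```python
# settings.py
# Stop Scrapy from fetching robots.txt and applying its rules.
ROBOTSTXT_OBEY = False
```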

Then you should have a log that no longer shows the request for robots.txt.

You can now see your response object, response headers, and try different XPath expression / CSS selectors to extract the data you want.

You can see the response directly in your browser with view(response).

Note that the page will render badly inside your browser, for lots of different reasons. This can be due to CORS issues, JavaScript code that didn't execute, or relative URLs for assets that won't work locally.

The scrapy shell is like a regular Python shell, so don't hesitate to load your favorite scripts/function in it.

Extracting Data

Scrapy doesn't execute any JavaScript by default, so if the website you are trying to scrape is using a frontend framework like Angular / React.js, you could have trouble accessing the data you want.

Now let's try some XPath expressions to extract the product title and price.

In order to extract the price, we are going to use an XPath expression that selects the first span after the div with the class my-4.

I could also use a CSS selector:

Creating a Scrapy Spider

With Scrapy, Spiders are classes where you define your crawling (what links / URLs need to be scraped) and scraping (what to extract) behavior.

Here are the different steps used by a spider to scrape a website:

- It starts by looking at the class attribute start_urls, and calls these URLs with the start_requests() method. You could override this method if you need to change the HTTP verb or add some parameters to the request (for example, sending a POST request instead of a GET).

- It will then generate a Request object for each URL, and send the response to the callback function parse().

- The parse() method will then extract the data (in our case, the product price, image, description, title) and return either a dictionary, an Item object, a Request or an iterable.

You may wonder why the parse method can return so many different objects. It's for flexibility. Let's say you want to scrape an E-commerce website that doesn't have any sitemap. You could start by scraping the product categories, so this would be a first parse method.

This method would then yield a Request object for each product category, with a new callback method parse2(). For each category you would need to handle pagination. Then, for each product, the actual scraping would generate an Item, so you would need a third parse function.

With Scrapy you can return the scraped data as a simple Python dictionary, but it is a good idea to use the built-in Scrapy Item class. It's a simple container for our scraped data, and Scrapy will look at this item's fields for many things, like exporting the data to different formats (JSON / CSV…), the item pipeline, etc.

So here is a basic Product class:

Now we can generate a spider, either with the scrapy genspider command line helper, or manually by putting your Spider's code inside the /spiders directory.

There are different types of Spiders in Scrapy to solve the most common web scraping use cases:

- Spider, which we will use. It takes a start_urls list and scrapes each one with a parse method.

- CrawlSpider follows links defined by a set of rules

- SitemapSpider extracts URLs defined in a sitemap

- Many more

In this EcomSpider class, there are two required attributes:

- name, which is our Spider's name (that you can run from inside the project using scrapy crawl spider_name)

- start_urls, which is the list of starting URLs (here, a single one)

The allowed_domains attribute is optional but important when you use a CrawlSpider that could follow links on different domains.

Then I've just populated the Product fields by using XPath expressions to extract the data I wanted as we saw earlier, and we return the item.

You can run this code with scrapy runspider, passing the spider's file name and the -o option to export the result into JSON (you could also export to CSV).

You should then get a nice JSON file containing the product's fields.

Item loaders

There are two common problems that you can face while extracting data from the Web:

- For the same website, the page layout and underlying HTML can be different. If you scrape an E-commerce website, you will often have a regular price and a discounted price, with different XPath / CSS selectors.

- The data can be dirty and need some kind of post-processing. Again, for an E-commerce website, it could be the way the prices are displayed, for example ($1.00, $1, $1,00).
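To illustrate the second problem, here is a small plain-Python sketch that normalizes the three price formats mentioned above. The helper name normalize_price is hypothetical, and it assumes that a comma is always a decimal separator (so it would mishandle thousands separators such as $1,000.00).

```python
from decimal import Decimal


def normalize_price(raw):
    """Turn strings such as '$1.00', '$1' or '$1,00' into a Decimal."""
    # Drop surrounding whitespace and the dollar sign, then treat a
    # comma as a decimal point (assumption: no thousands separators).
    cleaned = raw.strip().replace("$", "").replace(",", ".")
    return Decimal(cleaned)


prices = [normalize_price(p) for p in ("$1.00", "$1", "$1,00 ")]
```

All three inputs compare equal to Decimal("1") once normalized.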

Scrapy comes with a built-in solution for this: ItemLoaders. It's an interesting way to populate our Product object.

You can add several XPath expressions to the same Item field, and it will test them sequentially. By default, if several XPath expressions match, it will load all of the results into a list.

You can find many examples of input and output processors in the Scrapy documentation.

It's really useful when you need to transform/clean the data you extract. For example, extracting the currency from a price, or transforming a unit into another one (centimeters to meters, degrees Celsius to Fahrenheit)…

In our webpage we can find the product title with different XPath expressions: //title and //section[1]//h2/text()

Here is how you could use an ItemLoader in this case:

Generally you only want the first matching XPath, so you will need to add this output_processor=TakeFirst() to your item's field constructor.

In our case we only want the first matching XPath for each field, so a better approach would be to create our own ItemLoader and declare a default output_processor to take the first matching XPath:

I also added a price_in, which is an input processor to delete the dollar sign from the price. I'm using MapCompose, which is a built-in processor that takes one or several functions to be executed sequentially. You can add as many functions as you like. The convention is to add _in or _out to your Item field's name to add an input or output processor to it.

There are many more processors; you can learn more about them in the documentation.

Scraping multiple pages

Now that we know how to scrape a single page, it's time to learn how to scrape multiple pages, like the entire product catalog. As we saw earlier, there are different kinds of Spiders.

When you want to scrape an entire product catalog, the first thing you should look at is a sitemap. Sitemaps are built exactly for this: to show web crawlers how the website is structured.

Most of the time you can find one at base_url/sitemap.xml. Parsing a sitemap can be tricky, and again, Scrapy is here to help you with this.

In our case, you can find the sitemap here: https://clever-lichterman-044f16.netlify.com/sitemap.xml

If we look inside the sitemap, there are many URLs that we are not interested in, like the home page, blog posts, etc.

Fortunately, we can filter the URLs to parse only those that match some pattern. It's really easy; here we only want the URLs that have /products/ in them:

You can run this spider as follows to scrape all the products and export the result to a CSV file: scrapy runspider sitemap_spider.py -o output.csv

Now what if the website didn't have any sitemap? Once again, Scrapy has a solution for this!

Let me introduce you to the… CrawlSpider.

The CrawlSpider will crawl the target website by starting with a start_urls list. Then, for each URL, it will extract all the links based on a list of Rule objects. In our case it's easy: products have the same URL pattern, /products/product_title, so we only need to filter these URLs.

As you can see, all these built-in Spiders are really easy to use. It would have been much more complex to do it from scratch.

With Scrapy you don't have to think about the crawling logic, like adding new URLs to a queue, keeping track of already parsed URLs, concurrency…

Conclusion

In this post we saw a general overview of how to scrape the web with Scrapy and how it can solve your most common web scraping challenges. Of course we only scratched the surface, and there are many more interesting things to explore, like middlewares, exporters, extensions, and pipelines!

If you've been doing web scraping more “manually” with tools like BeautifulSoup / Requests, it's easy to understand how Scrapy can help save time and build more maintainable scrapers.

I hope you liked this Scrapy tutorial and that it will motivate you to experiment with it.

For further reading don't hesitate to look at the great Scrapy documentation.

We have also published our custom integration with Scrapy. It allows you to execute JavaScript with Scrapy, so do not hesitate to check it out.

You can also check out our web scraping with Python tutorial to learn more about web scraping.

Happy Scraping!

Wednesday, January 20, 2021

There are many free web scraping tools. However, not all web scraping software is for non-programmers. The tools listed below are the best low-cost web scraping tools that require no coding skills. The freeware listed below is easy to pick up and would satisfy most scraping needs with a reasonable amount of data.

Web Scraper Client

1. Octoparse

Octoparse is a robust web scraping tool which also provides web scraping services for business owners and enterprises. As it can be installed on both Windows and Mac OS, users can also scrape data with Apple devices. Web data extraction covers, but is not limited to, social media, e-commerce, marketing, real estate listings and many others. Unlike other web scrapers that only scrape content with a simple HTML structure, Octoparse can handle both static and dynamic websites with AJAX, JavaScript, cookies, etc. You can create a scraping task to extract data from a complex website, such as a site that requires login and pagination. Octoparse can even deal with information that is not shown on the website by parsing the source code. As a result, you can achieve automatic inventory tracking, price monitoring and lead generation at your fingertips.

Octoparse has a Task Template Mode and an Advanced Mode, for users with basic and advanced scraping skills respectively.

- A user with basic scraping skills can make a smart move by using the Task Template Mode, a brand-new feature that turns web pages into structured data instantly. The Task Template Mode only takes about 6.5 seconds to pull down the data behind one page and allows you to download the data to Excel.

- The Advanced Mode has more flexibility compared to the other mode. It allows users to configure and edit the workflow with more options. Advanced Mode is used for scraping more complex websites with a massive amount of data. With its industry-leading data fields auto-detection feature, Octoparse also allows you to build a crawler with ease. If you are not satisfied with the auto-generated data fields, you can always customize the scraping task to let it scrape the data for you. The cloud services enable you to bulk extract huge amounts of data within a short time frame, since multiple cloud servers concurrently run one task. Besides that, the cloud service will allow you to store and retrieve the data at any time.

2. ParseHub

ParseHub is a great web scraper that supports collecting data from websites that use AJAX technologies, JavaScript, cookies, etc. ParseHub leverages machine learning technology, which is able to read, analyze and transform web documents into relevant data.

The desktop application of ParseHub supports systems such as Windows, Mac OS X, and Linux, or you can use the browser extension to achieve instant scraping. It is not fully free, but you can still set up to five scraping tasks for free. The paid subscription plan allows you to set up at least 20 private projects. There are plenty of tutorials at ParseHub, and you can get more information from the homepage.

3. Import.io

Import.io is a SaaS web data integration software. It provides a visual environment for end-users to design and customize the workflows for harvesting data. It also allows you to capture photos and PDFs in a usable format. Besides, it covers the entire web extraction lifecycle, from data extraction to analysis, within one platform, and you can easily integrate it into other systems as well.

4. Outwit hub

Outwit hub is a Firefox extension, and it can be easily downloaded from the Firefox add-ons store. Once installed and activated, you can scrape the content from websites instantly. It has an outstanding 'Fast Scrape' feature, which quickly scrapes data from a list of URLs that you feed in. Extracting data from sites using Outwit hub doesn’t demand programming skills. The scraping process is fairly easy to pick up. You can refer to our guide on using Outwit hub to get started with web scraping using the tool. It is a good alternative web scraping tool if you need to extract a light amount of information from websites instantly.

Web Scraping Plugins/Extension

1. Data Scraper (Chrome)

Data Scraper can scrape data from tables and listing-type data from a single web page. Its free plan should satisfy most simple scraping with a light amount of data. The paid plan has more features, such as an API and many anonymous IP proxies, and lets you fetch a large volume of data in real time faster. You can scrape up to 500 pages per month for free; beyond that, you need to upgrade to a paid plan.

2. Web scraper

Web scraper has a Chrome extension and a cloud extension. With the Chrome extension, you can create a sitemap (plan) on how a website should be navigated and what data should be scraped. The cloud extension can scrape a large volume of data and run multiple scraping tasks concurrently. You can export the data in CSV, or store the data in CouchDB.

3. Scraper (Chrome)

Scraper is another easy-to-use screen web scraper that can easily extract data from an online table, and upload the result to Google Docs.

Just select some text in a table or a list, right-click on the selected text and choose 'Scrape Similar' from the browser menu. Then you will get the data and can extract other content by adding new columns using XPath or jQuery. This tool is intended for intermediate to advanced users who know how to write XPath.

Web-based Scraping Application

1. Dexi.io (formerly known as Cloud scrape)

Dexi.io is intended for advanced users who have proficient programming skills. It has three types of robots for you to create a scraping task: Extractor, Crawler, and Pipes. It provides various tools that allow you to extract the data more precisely. With its modern features, you will be able to address the details on any website. People with no programming skills may need to take a while to get used to it before creating a web scraping robot. Check out their homepage to learn more about the knowledge base.

The freeware provides anonymous web proxy servers for web scraping. Extracted data will be hosted on Dexi.io’s servers for two weeks before being archived, or you can directly export the extracted data to JSON or CSV files. It offers paid services to meet your needs for getting real-time data.

2. Webhose.io

Webhose.io enables you to get real-time data by scraping online sources from all over the world into various clean formats. You can even scrape information on the dark web. This web scraper allows you to scrape data in many different languages using multiple filters, and to export scraped data in XML, JSON, and RSS formats.

The freeware offers a free subscription plan for you to make 1000 HTTP requests per month and paid subscription plans to make more HTTP requests per month to suit your web scraping needs.

Author: Ashley

Ashley is a data enthusiast and passionate blogger with hands-on experience in web scraping. She focuses on capturing web data and analyzing it in a way that empowers companies and businesses with actionable insights. Read her blog to discover practical tips and applications on web data extraction.